Over the last several decades, people building a knowledge-related solution have tended to look in one of two directions, depending on whether the data is structured or unstructured. The result is either so-called structured search, which runs a query language over a database, or semantic search, which applies inference and reasoning over the meaning of data, mostly text.

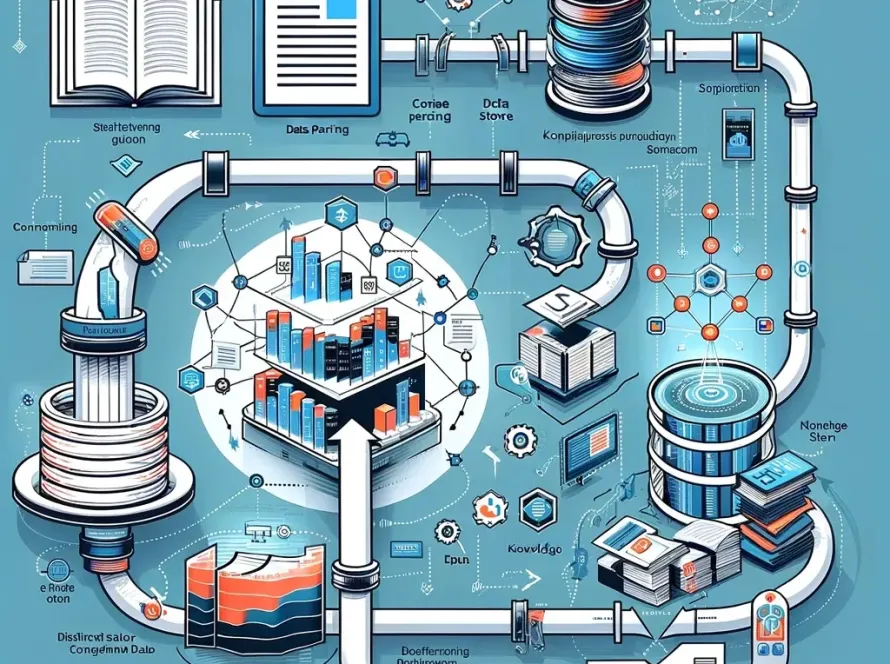

There are also methods that bring some structure into unstructured data, using technologies such as entity extraction, entity linking, and relation extraction, in order to achieve better accuracy and performance. With the rise of Large Language Models (LLMs), there is a new and promising way to bring the two together: store both structure and meaning (in the form of vectors created by the text embedding process) in a knowledge graph (KG). We will explore this idea further with an example.
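To make the idea concrete before turning to the article's own example, here is a minimal Python sketch of a graph that keeps both triples and embeddings. It is an illustration only, not the approach from the full article: the `Node` and `KnowledgeGraph` classes, the `match` and `nearest` methods, and the predicate names are all hypothetical, and the three-dimensional vectors are hand-written toy values standing in for what a real text embedding model would produce.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


@dataclass
class Node:
    name: str
    text: str
    embedding: list  # toy vector; assumed to come from an embedding model in practice


@dataclass
class KnowledgeGraph:
    nodes: dict = field(default_factory=dict)
    triples: set = field(default_factory=set)

    def add_node(self, node):
        self.nodes[node.name] = node

    def add_triple(self, subject, predicate, obj):
        self.triples.add((subject, predicate, obj))

    def match(self, subject=None, predicate=None, obj=None):
        """Structured search: return triples matching every field that is given."""
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )

    def nearest(self, query_vec, k=1):
        """Semantic search: rank nodes by cosine similarity to the query vector."""
        ranked = sorted(
            self.nodes.values(),
            key=lambda n: cosine(query_vec, n.embedding),
            reverse=True,
        )
        return [n.name for n in ranked[:k]]


kg = KnowledgeGraph()
# Toy embeddings (hypothetical values, not produced by any real model).
kg.add_node(Node("Ada Lovelace", "Mathematician and writer", [0.9, 0.1, 0.6]))
kg.add_node(Node("Charles Babbage", "Inventor and engineer", [0.9, 0.3, 0.1]))
kg.add_node(Node("Analytical Engine", "Proposed mechanical computer", [0.1, 0.9, 0.2]))
kg.add_triple("Ada Lovelace", "wrote_notes_on", "Analytical Engine")
kg.add_triple("Charles Babbage", "designed", "Analytical Engine")

# Semantic step: find the node closest in meaning to a (toy) query vector.
hit = kg.nearest([0.0, 1.0, 0.1], k=1)[0]
# Structured step: follow explicit triples that point at that node.
related = kg.match(obj=hit)
print(hit, related)
```

The last three lines show the combination the paragraph describes: the vector lookup finds an entry point by meaning, and the triples then give an explicit, explainable answer about what is connected to it.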

Read the full article on Medium: link

The future (of KG): With the above analysis, we envision a KG that encodes knowledge both in the form of knowledge triples and in the form of LLM embeddings, where the former are easier to use for human understanding and explainability, whereas the latter is easier for machine comprehension.

Luna Dong (source)