Unlocking new capabilities in large language models with MindMap: Integrating knowledge graphs for superior reasoning and accurate responses.

A paper review of MindMap: Knowledge Graph Prompting Sparks Graph of Thoughts in Large Language Models

Source: arxiv

Our method enables LLMs to comprehend KG inputs and infer with a combination of implicit and external knowledge.

from the article

Overview:

This paper introduces MindMap, a method that uses knowledge graphs (KGs) to enhance the reasoning capabilities of large language models (LLMs). By integrating KGs with LLMs, MindMap enables the models to access up-to-date knowledge and generate more accurate and interpretable responses. The study demonstrates significant improvements in question-answering tasks across various datasets.

Key Concepts and Definitions:

- Large Language Models (LLMs): AI models trained on extensive text data to generate human-like text and perform complex reasoning tasks.

- Knowledge Graphs (KGs): Structured representations of knowledge using entities (nodes) and relationships (edges) to provide explicit, interpretable information.

- MindMap: A framework that integrates KGs with LLMs to enhance their reasoning and accuracy by creating a “graph of thoughts”.
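To make the KG definition concrete, here is a minimal sketch of a knowledge graph stored as (head, relation, tail) triples with a lookup index. The triples and the `build_index` helper are illustrative assumptions and are not taken from the paper's datasets or code.

```python
from collections import defaultdict

# Toy (head, relation, tail) triples; illustrative only, not from the paper's KG.
TRIPLES = [
    ("cirrhosis", "has_symptom", "jaundice"),
    ("cirrhosis", "needs_test", "blood test"),
]

def build_index(triples):
    """Index outgoing edges by head entity (nodes are entities, edges are relations)."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[head].append((relation, tail))
    return index

index = build_index(TRIPLES)
print(index["cirrhosis"])
```

Each edge stays explicit and inspectable, which is the property the review refers to as interpretable information.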

Limitations and Problems of Existing Methods:

- Inflexibility: Pre-trained LLMs possess outdated knowledge and are challenging to update without risking catastrophic forgetting.

- Hallucinations: LLMs often produce plausible-sounding but incorrect outputs, posing risks in high-stakes applications like medical diagnosis.

- Lack of Transparency: The black-box nature of LLMs makes it difficult to validate their knowledge and understand their reasoning processes.

Approaches:

MindMap Framework:

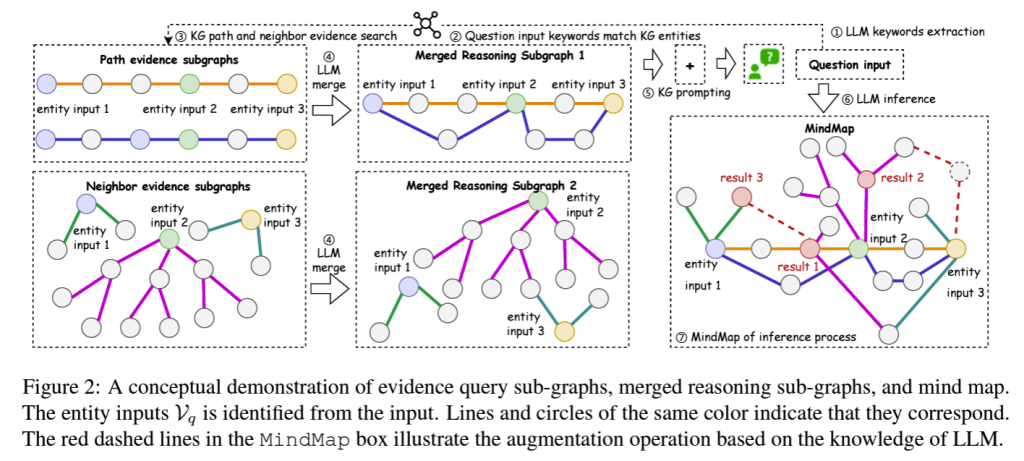

- Evidence Graph Mining: Extracts key entities from queries and builds evidence sub-graphs from the source KG.

- Evidence Graph Aggregation: Aggregates sub-graphs into a merged reasoning graph, providing a comprehensive view of the evidence.

- LLM Reasoning with MindMap: Prompts LLMs to use the merged graph and their implicit knowledge to generate answers, creating a transparent reasoning pathway.
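The three stages above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: entity extraction is replaced by naive keyword matching, sub-graph mining by a bounded breadth-first search, and all names (`extract_entities`, `mine_subgraph`, `aggregate`, `build_prompt`) and the toy triples are hypothetical.

```python
from collections import deque

# Toy KG as (head, relation, tail) triples; illustrative only.
TRIPLES = [
    ("jaundice", "possible_disease", "cirrhosis"),
    ("fatigue", "possible_disease", "cirrhosis"),
    ("cirrhosis", "needs_test", "blood test"),
    ("cough", "possible_disease", "bronchitis"),
]

def extract_entities(question, triples):
    """Assumption: keyword matching stands in for the real entity extraction step."""
    entities = {h for h, _, _ in triples} | {t for _, _, t in triples}
    text = question.lower()
    return sorted(e for e in entities if e in text)

def mine_subgraph(entity, triples, max_hops=2):
    """Evidence graph mining: collect triples reachable within max_hops of entity."""
    seen = {entity}
    frontier = deque([(entity, 0)])
    evidence = []
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue
        for h, r, t in triples:
            if h != node:
                continue
            if (h, r, t) not in evidence:
                evidence.append((h, r, t))
            if t not in seen:
                seen.add(t)
                frontier.append((t, depth + 1))
    return evidence

def aggregate(subgraphs):
    """Evidence graph aggregation: merge sub-graphs, dropping duplicate triples."""
    merged = []
    for subgraph in subgraphs:
        for triple in subgraph:
            if triple not in merged:
                merged.append(triple)
    return merged

def build_prompt(question, merged):
    """LLM reasoning step: serialize the merged graph into a prompt string."""
    facts = "\n".join(f"{h} -[{r}]-> {t}" for h, r, t in merged)
    return (
        "Answer using the evidence graph and your own knowledge.\n"
        f"Evidence graph:\n{facts}\n"
        f"Question: {question}"
    )

question = "I have jaundice and fatigue. Which test should I take?"
entities = extract_entities(question, TRIPLES)
merged = aggregate([mine_subgraph(e, TRIPLES) for e in entities])
prompt = build_prompt(question, merged)
print(prompt)
```

The resulting prompt string would then be sent to an LLM of your choice; that call is left out here because it depends on the provider.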

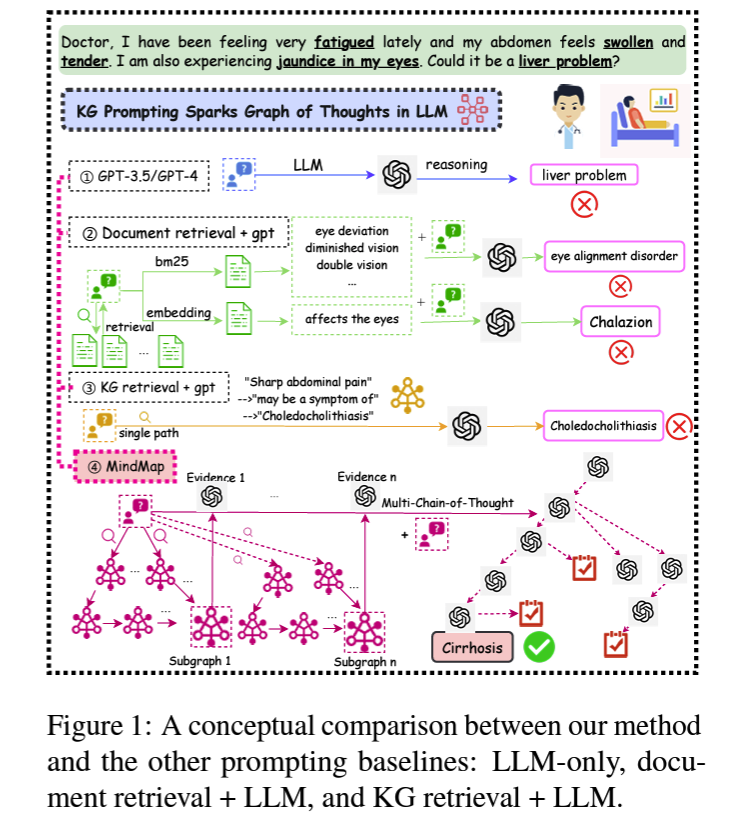

Comparison to Existing Methods:

- Retrieval-Augmented Generation: MindMap surpasses traditional document retrieval methods by leveraging the structured information in KGs, resulting in more accurate and concise responses.

- Graph Mining with LLMs: Unlike previous approaches, MindMap focuses on text generation tasks requiring complex reasoning across multiple evidence graphs.

Important Visuals:

MindMap Framework Overview

Evidence Graph Mining Process

Architecture of MindMap

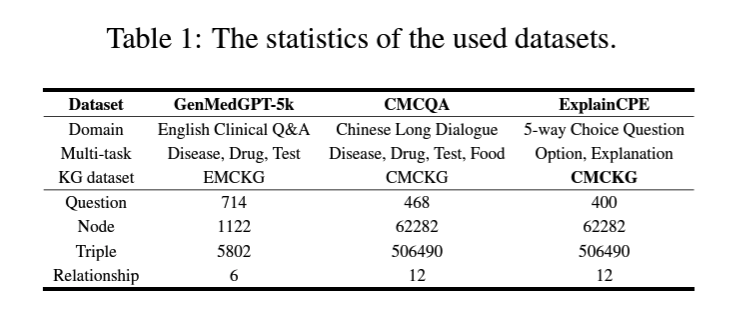

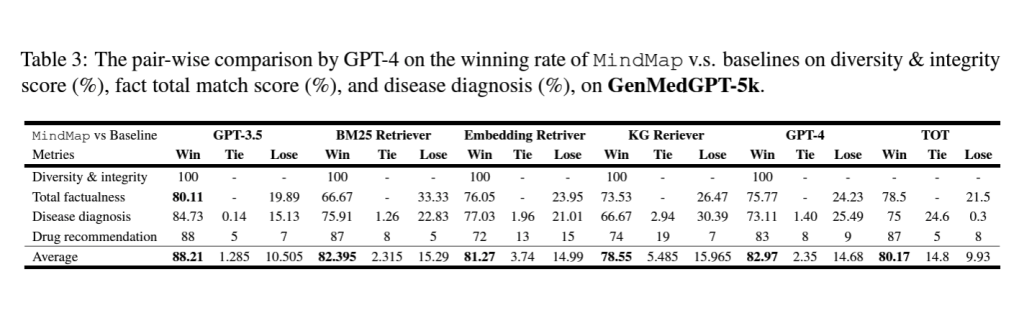

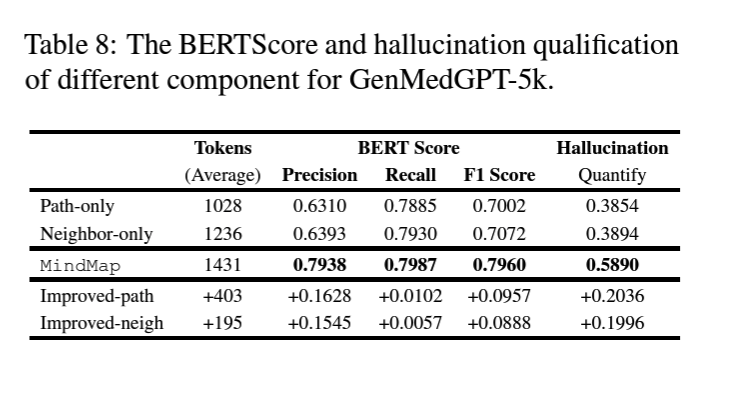

In-depth Analysis

How does MindMap perform without correct KG knowledge?

In Figure 4(c) (Appendix F), on questions where GPT-3.5 answers correctly but the KG Retriever errs, MindMap achieves an accuracy rate of 55%. The authors attribute the low accuracy of the KG Retriever to its inability to retrieve the knowledge needed to solve the problem. MindMap addresses such cases by leveraging the LLM's inherent knowledge, identifying pertinent external explicit knowledge, and integrating both into a unified graph structure.

How robust is MindMap to unmatched fact queries?

The question in Figure 6 (Appendix F) contains misleading symptom facts, such as ‘jaundice in my eyes’, which lead baseline models to retrieve irrelevant knowledge linked to ‘eye’. As a result, the baselines fail to identify the correct disease and recommend drugs and tests unrelated to liver disease. In contrast, MindMap accurately identifies ‘cirrhosis’ and recommends the relevant ‘blood test’, showcasing its robustness.

How does MindMap aggregate evidence graphs considering entity semantics?

In Figure 7 of Appendix F, nodes like ‘vaginitis’ and ‘atrophic vaginitis’ are present in different evidence sub-graphs but share a semantic identity. MindMap allows LLMs to disambiguate and merge these diverse evidence graphs for more effective reasoning. The resulting mind maps also map entities back to the input evidence graphs. Additionally, Figure 7 illustrates the GPT-4 rater’s preference for total factual correctness and disease diagnosis factual correctness across methods. Notably, MindMap is highlighted for providing more specific disease diagnosis results compared to the baseline, which offers vague mentions and lacks treatment options. In terms of disease diagnosis factual correctness, the GPT-4 rater observes that MindMap aligns better with the ground truth.
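As a rough illustration of merging sub-graphs whose nodes share a semantic identity, the sketch below canonicalizes entity names before merging and records which sub-graph each merged triple came from. In MindMap the LLM itself performs the disambiguation; the hand-written `ALIASES` table, the function names, and the toy triples here are assumptions made purely for illustration.

```python
# Hypothetical alias table; in MindMap the LLM decides which entities match.
ALIASES = {"atrophic vaginitis": "vaginitis"}

def canonical(entity):
    """Map an entity name to its canonical form (unchanged if no alias is known)."""
    return ALIASES.get(entity, entity)

def merge_evidence(subgraphs):
    """Merge sub-graphs; each merged triple maps to the indices of its source sub-graphs."""
    merged = {}
    for index, subgraph in enumerate(subgraphs):
        for head, relation, tail in subgraph:
            key = (canonical(head), relation, canonical(tail))
            merged.setdefault(key, set()).add(index)
    return merged

# Toy evidence sub-graphs; illustrative only.
subgraphs = [
    [("vaginitis", "has_symptom", "itching")],
    [("atrophic vaginitis", "has_symptom", "itching"),
     ("atrophic vaginitis", "needs_test", "pelvic exam")],
]

merged = merge_evidence(subgraphs)
for triple, sources in merged.items():
    print(triple, "from sub-graphs", sorted(sources))
```

Keeping the source indices alongside each merged triple mirrors the idea that the mind map can point back to the evidence graphs it was built from.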

How does MindMap visualize the inference process and evidence sources?

Figure 8 in Appendix F presents a comprehensive response to a CMCQA question. It includes a summary, an inference process, and a mind map. The summary extracts the accurate result from the mind map, while the inference process displays multiple reasoning chains from the entities on the evidence graph Gm. The mind map combines all the inference chains into a reasoning graph, providing an intuitive understanding of knowledge connections in each step and the sources of evidence sub-graphs.

How does MindMap leverage LLM knowledge for various tasks?

Figure 4 in Appendix F illustrates MindMap’s performance on diverse question types. For drug-related questions (a) and (d), which demand in-depth knowledge, MindMap outperforms other methods. Disease-related questions (b) and (f) show comparable results between retrieval methods and MindMap, indicating that incorporating external knowledge mitigates errors in language model outputs. Notably, for general knowledge questions (c), LLMs like GPT-3.5 perform better, while retrieval methods lag. This suggests that retrieval methods may overlook the knowledge embedded in LLMs. Conversely, MindMap performs as well as GPT-3.5 in handling general knowledge questions, highlighting its effectiveness in synergizing LLM and KG knowledge for adaptable inference across datasets with varying KG fact accuracies.

Conclusion:

MindMap represents a significant advancement in leveraging KGs to enhance LLMs, improving their ability to generate accurate and interpretable responses. This method demonstrates the potential to transform how LLMs handle complex reasoning tasks by integrating explicit, structured knowledge from KGs.

For a deeper dive into this innovative research, read the full paper here.
